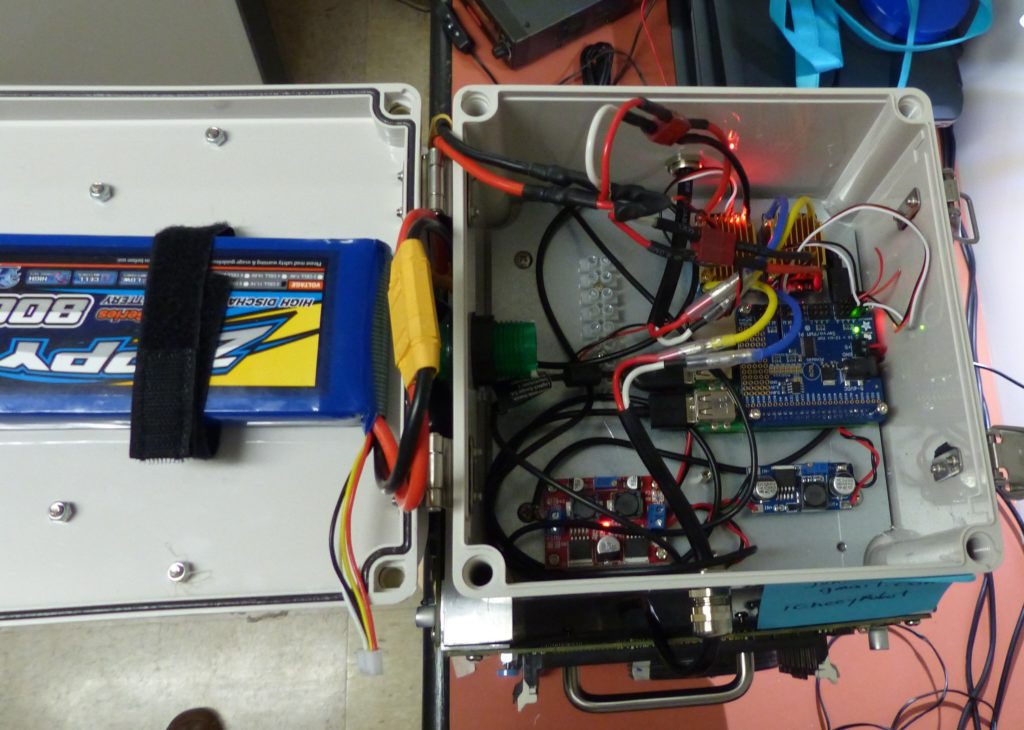

Work has begun on a robot that dispenses short stories at the touch of a button for Writers Week in 2020. The Storybot will be located in one of the Port Adelaide Enfield libraries and will print stories from its pre-curated collection on a thermal printer for library patrons to take away with them.

Design

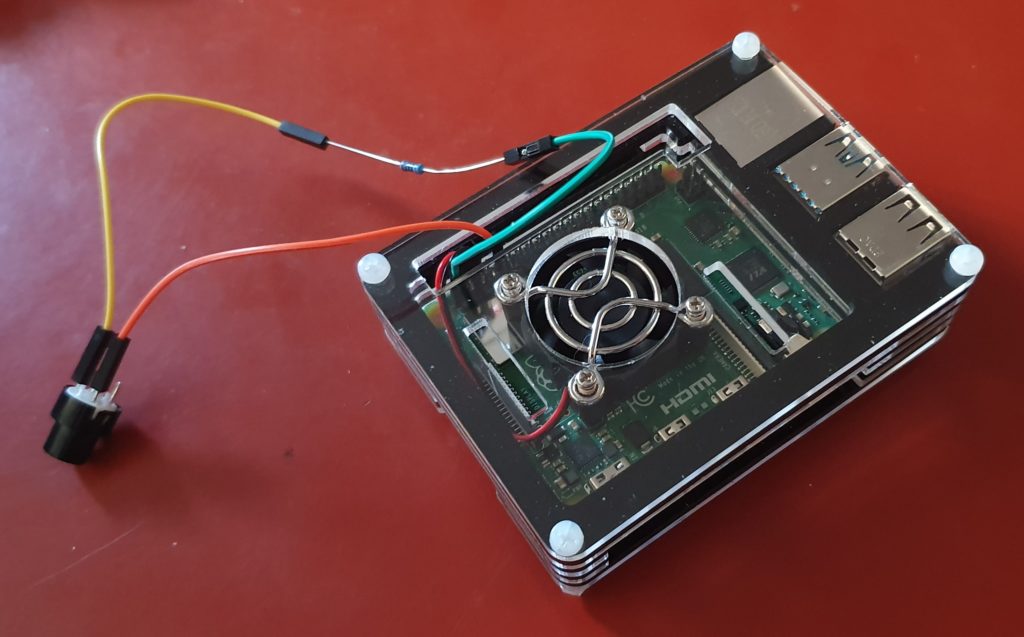

The initial design of the Storybot uses the following parts:

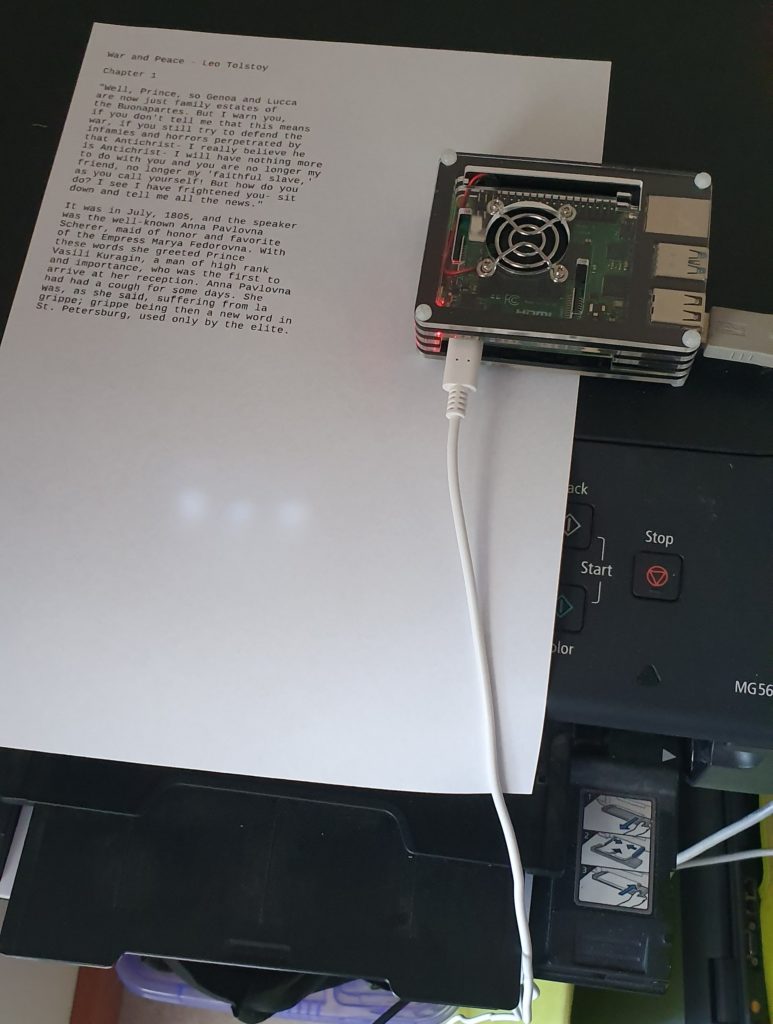

- Raspberry Pi 4, with MicroSD card

- Thermal Receipt Printer, USB (24V)

- Button, resistor (220 Ohm) and connecting wire

- Power supply (for Raspberry Pi and Printer)

- Enclosure and stand

Initial print testing was done with a regular Canon Pixma USB printer.

Software

A repository of the code created for the Storybot can be found on GitHub. This repository, ‘storybot’, is cloned directly into a subdirectory of the ‘pi’ user’s home directory (in our case we are using ‘Document/git’).

The Raspberry Pi has the most recent Raspbian installed, with all packages updated to their latest versions.

In addition, the CUPS printing package has been installed, which allows the Raspberry Pi to print to any of the supported printers, including the receipt-format thermal printer. This printer prints 40 fixed-width columns per row.

A quick solution[1] for selecting a random story used the ‘fortune’[2] package.

Stories are copied to a directory on the Raspberry Pi as individual ASCII text files. Some example files have been created for testing purposes[3]. A global data file and index are created from these files, which are also reformatted to a 40-character line length. Fortune reads the data file and index, then extracts a story and prints it.
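The reformatting step can be illustrated with a small Python sketch. This is not the code from the repository, only an assumption about how such a step might look: the function names are hypothetical, and the output follows the standard fortune source layout in which entries are separated by a line containing only `%`. The index itself would presumably still be generated by fortune's companion `strfile` tool.

```python
import textwrap

LINE_WIDTH = 40  # printer column width stated in the text


def wrap_story(text, width=LINE_WIDTH):
    """Re-wrap each paragraph of a story to at most `width` characters per line."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    # Collapse existing line breaks inside a paragraph before re-wrapping it.
    wrapped = [textwrap.fill(" ".join(p.split()), width=width) for p in paragraphs]
    return "\n\n".join(wrapped)


def build_fortune_source(stories, width=LINE_WIDTH):
    """Join wrapped stories into one fortune-style source file body.

    Each entry is followed by a line containing only '%', the separator
    that fortune uses between entries.
    """
    return "".join(wrap_story(story, width) + "\n%\n" for story in stories)
```

One caveat with this approach: a story that itself contains a line consisting only of `%` would be split into two entries, so such lines would need to be escaped or rejected.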

Story management is driven by a global Makefile with the following targets:

- make help - this message (default)

- make story - display a story

- make prepare - compile the stories into data file (This will be run automatically, if required.)

- make list - list story files

- make clean - reset system by removing generated files

- make status - display system status

This system allows dependencies to be managed, so that ‘make prepare’ would be run if it is required and hasn’t yet been run, before ‘make story’. This allows new story uploads to be done even while the system may be printing.
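The dependency rule that make applies here is a timestamp comparison: the data file is rebuilt when it is missing or older than any of its inputs. A minimal Python sketch of the same check follows, for illustration only. The function name, the `*.txt` pattern and the paths are assumptions, not details taken from the repository's Makefile.

```python
from pathlib import Path


def needs_prepare(story_dir, data_file):
    """Return True if the data file is missing or older than any story file."""
    data = Path(data_file)
    if not data.exists():
        return True
    data_mtime = data.stat().st_mtime
    # Assumes stories are stored as *.txt files in a single directory.
    return any(
        story.stat().st_mtime > data_mtime
        for story in Path(story_dir).glob("*.txt")
    )
```

Uploading a new story gives it a newer modification time than the data file, so the next request for a story would trigger the prepare step first.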

Printing is done by sending (piping) the story output directly to the default printer with

make story | lpr
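When the print is eventually triggered from a script rather than typed at a shell, the same pipeline can be driven from Python. The sketch below is one possible way to do it, not the project's code. The function name `print_story` is hypothetical, and it assumes the script runs from the repository directory with a default CUPS printer already configured.

```python
import subprocess


def print_story(story_cmd=("make", "story"), print_cmd=("lpr",)):
    """Run story_cmd and feed its standard output to print_cmd.

    Raises subprocess.CalledProcessError if story_cmd fails.
    Returns the exit status of print_cmd.
    """
    # Capture the whole story first so nothing is printed if make fails.
    story = subprocess.run(list(story_cmd), capture_output=True, check=True)
    printer = subprocess.run(list(print_cmd), input=story.stdout)
    return printer.returncode
```

Unlike the shell pipeline, this version checks the exit status of the story command before anything reaches the printer, so a failed `make` does not produce a blank or partial receipt.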

There are several options for detecting a press of a button wired to a GPIO pin. These still need to be investigated, but they include Node-RED, WiringPi (C code, used in a previous project) and RPi.GPIO (Python).

- Node-RED: This is a large-ish application, but it works well and does not require any additional configuration on system start-up and shut-down.

- WiringPi: This C/C++ library is useful for creating lightweight binary programs, but requires additional configuration so that the created programs restart on reboot/startup. It is no longer being actively supported by its developer, but is open source software.

- RPi.GPIO: Python library that, together with Python, makes writing scripts that interact with the GPIO on the Raspberry Pi very easy.

Each of these approaches will need to take care of button bounce/noisy inputs, and stop multiple press events happening in a short period. Some hardware conditioning may also be necessary.
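Whichever option is chosen, the software side of debouncing comes down to ignoring presses that arrive too soon after the last accepted one. The following is a hardware-independent sketch of that idea. The class name and the 0.3 second interval are illustrative assumptions, and in practice the interval would probably need to cover the time it takes to print a story.

```python
import time


class Debouncer:
    """Accept a press only if enough time has passed since the last accepted one."""

    def __init__(self, min_interval=0.3, clock=time.monotonic):
        self.min_interval = min_interval  # seconds; illustrative value
        self.clock = clock  # injectable so the logic can be tested without waiting
        self._last = None

    def accept(self):
        """Return True if this press should trigger an action, False if ignored."""
        now = self.clock()
        if self._last is not None and now - self._last < self.min_interval:
            return False
        self._last = now
        return True
```

A button callback would call `accept()` and only start a print when it returns True. Libraries may offer something similar built in: RPi.GPIO's `add_event_detect`, for instance, accepts a `bouncetime` argument in milliseconds.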

Notes:

[1] Very quick. There are no options for recording any statistics about which stories are printed, or when, which might be a useful thing to record and report on.

[2] The fortune package has a very long history, originally appearing in very early editions of UNIX operating systems.

[3] Stories for testing were created from the initial paragraphs of “The Princess Bride”, “War and Peace” and “A Tale of Two Cities”.